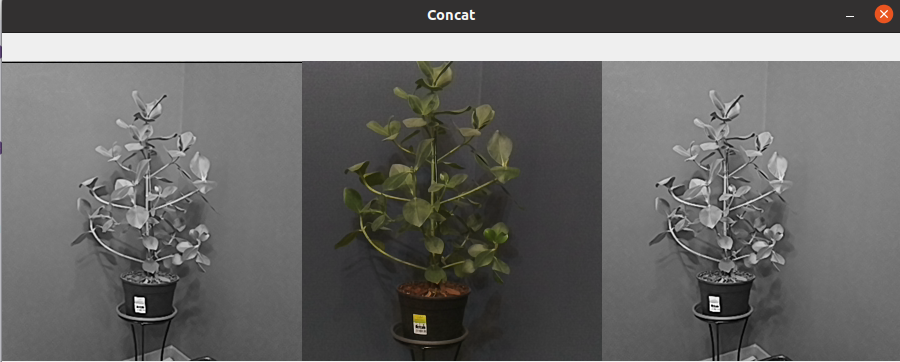

Multi-Input Frame Concatenation

Demo

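Setup

The script loads `../models/concat_openvino_2021.4_6shave.blob` relative to its own location, or a blob path passed as the first command-line argument. If that file is missing, run `install_requirements.py`, as the script's error message suggests.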

Source code

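The host loop in this example receives each NN result as a flat list of FP16 values in planar (channel-first) order and turns it into an OpenCV image with `reshape`, `astype`, and `transpose`. The following NumPy-only sketch runs without a device. It builds a synthetic buffer and applies the same steps so you can check the resulting layout. The helper name `decode_planar` and the fake input values are illustrative assumptions, not part of the DepthAI API.

```python
import numpy as np

SHAPE = 300  # per-input frame size, as in the example


def decode_planar(flat, height=SHAPE, width=SHAPE * 3):
    """Hypothetical helper: turn a flat planar (C, H, W) buffer into an HWC uint8 image."""
    planar = np.asarray(flat, dtype=np.float16).reshape(3, height, width)
    # Channel-first -> channel-last, the layout OpenCV expects for BGR images
    return planar.astype(np.uint8).transpose(1, 2, 0)


# Synthetic buffer: plane 0 (B) = 10, plane 1 (G) = 20, plane 2 (R) = 30
plane_size = SHAPE * SHAPE * 3  # one plane covers 300 rows x 900 columns
fake = np.concatenate([np.full(plane_size, v, dtype=np.float16) for v in (10, 20, 30)])

# getFirstLayerFp16() returns a Python list, so mimic that here
frame = decode_planar(fake.tolist())
print(frame.shape)  # (300, 900, 3)
print(frame[0, 0])  # [10 20 30]
```

Values in the 0 to 255 range are exactly representable in FP16, so the cast to `uint8` loses nothing for pixel data.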

```python
#!/usr/bin/env python3

from pathlib import Path
import sys
import numpy as np
import cv2
import depthai as dai

SHAPE = 300

# Default model path; it can be overridden by the first command-line argument
nnPath = str((Path(__file__).parent / Path('../models/concat_openvino_2021.4_6shave.blob')).resolve().absolute())
if len(sys.argv) > 1:
    nnPath = sys.argv[1]

if not Path(nnPath).exists():
    raise FileNotFoundError(f'Required file/s not found, please run "{sys.executable} install_requirements.py"')

p = dai.Pipeline()
p.setOpenVINOVersion(dai.OpenVINO.VERSION_2021_4)

camRgb = p.createColorCamera()
camRgb.setPreviewSize(SHAPE, SHAPE)
camRgb.setInterleaved(False)
camRgb.setColorOrder(dai.ColorCameraProperties.ColorOrder.BGR)

def create_mono(p, socket):
    mono = p.create(dai.node.MonoCamera)
    mono.setBoardSocket(socket)
    mono.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)

    # ImageManip resizes the frame (the concat NN expects 300x300 inputs) and converts
    # the grayscale frame to planar BGR so it matches the color preview
    manip = p.create(dai.node.ImageManip)
    manip.initialConfig.setResize(SHAPE, SHAPE)
    manip.initialConfig.setFrameType(dai.RawImgFrame.Type.BGR888p)
    mono.out.link(manip.inputImage)
    return manip.out

# NN that concatenates the three input frames into a single frame
nn = p.createNeuralNetwork()
nn.setBlobPath(nnPath)
nn.setNumInferenceThreads(2)

# Link the RGB preview and both mono streams to the NN's named inputs
camRgb.preview.link(nn.inputs['img2'])
create_mono(p, dai.CameraBoardSocket.CAM_B).link(nn.inputs['img1'])
create_mono(p, dai.CameraBoardSocket.CAM_C).link(nn.inputs['img3'])

# Send the concatenated frame from the NN to the host via XLink
nn_xout = p.createXLinkOut()
nn_xout.setStreamName("nn")
nn.out.link(nn_xout.input)

# Pipeline is defined, now we can connect to the device
with dai.Device(p) as device:
    qNn = device.getOutputQueue(name="nn", maxSize=4, blocking=False)
    # Planar NN output: 3 channels x SHAPE rows x (3 * SHAPE) columns
    shape = (3, SHAPE, SHAPE * 3)

    while True:
        inNn = np.array(qNn.get().getFirstLayerFp16())
        # Planar (CHW) FP16 values -> interleaved (HWC) uint8 frame for OpenCV
        frame = inNn.reshape(shape).astype(np.uint8).transpose(1, 2, 0)

        cv2.imshow("Concat", frame)

        if cv2.waitKey(1) == ord('q'):
            break
```

Pipeline
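
In the pipeline, the 300x300 RGB preview and the two resized mono streams all feed one NeuralNetwork node through its named inputs `img1`, `img2`, and `img3`, and the NN output is read on the host as a single `(3, 300, 900)` planar frame. A NumPy sketch of that concatenation follows. It assumes the blob joins its inputs along the width axis in `img1`, `img2`, `img3` order; the blob's internals are not shown in this example, and `concat_planar` is a hypothetical helper.

```python
import numpy as np

SHAPE = 300


def concat_planar(img1, img2, img3):
    """Sketch of the assumed model: join three planar (3, H, W) frames along the width axis."""
    for img in (img1, img2, img3):
        if img.shape != (3, SHAPE, SHAPE):
            raise ValueError(f"expected planar (3, {SHAPE}, {SHAPE}) input, got {img.shape}")
    return np.concatenate((img1, img2, img3), axis=2)


# Stand-ins for the NN inputs: img1 (CAM_B mono), img2 (RGB preview), img3 (CAM_C mono)
img1 = np.full((3, SHAPE, SHAPE), 1, dtype=np.float16)
img2 = np.full((3, SHAPE, SHAPE), 2, dtype=np.float16)
img3 = np.full((3, SHAPE, SHAPE), 3, dtype=np.float16)

out = concat_planar(img1, img2, img3)
print(out.shape)  # (3, 300, 900)
print(out[0, 0, 0], out[0, 0, SHAPE], out[0, 0, 2 * SHAPE])  # 1.0 2.0 3.0
```

Because every input already has the same planar BGR layout (the ImageManip nodes convert the mono frames), concatenation only has to stitch columns; no per-channel handling is needed.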
