Resolution Techniques for NNs

- Input frame AR mismatch - when your NN model expects a different aspect ratio than the sensor's aspect ratio

- Visualization of the NN output - when you want to visualize the NN output on a higher-resolution frame

Input frame AR mismatch

- Crop the ISP frame to a 1:1 aspect ratio and lose some FOV

- Stretch the ISP frame to the 1:1 aspect ratio of the NN

- Apply letterboxing to the ISP frame to get a 1:1 aspect ratio frame

Each of these options can be applied with the ImageManip node by setting the appropriate ResizeMode.
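To make the trade-off between the three options concrete, here is a minimal host-side sketch of the geometry behind each one. It is plain Python and does not call DepthAI; the function name `resize_geometry` and the mode strings are hypothetical and chosen only for illustration. It assumes cropping and letterboxing use a single uniform scale factor, while stretching scales each axis independently.

```python
def resize_geometry(src_w, src_h, dst_w, dst_h, mode):
    """Return (scale_x, scale_y, fov_kept, padding_fraction) for a resize mode.

    fov_kept is the fraction of the source area that ends up in the output;
    padding_fraction is the fraction of the output area filled with padding.
    """
    sx, sy = dst_w / src_w, dst_h / src_h
    if mode == "stretch":
        # Independent scale per axis: full FOV, but the image is distorted.
        return sx, sy, 1.0, 0.0
    if mode == "crop":
        # Uniform scale that fills the output; whatever overflows is cut off.
        s = max(sx, sy)
        fov_kept = (dst_w / s) * (dst_h / s) / (src_w * src_h)
        return s, s, fov_kept, 0.0
    if mode == "letterbox":
        # Uniform scale that fits inside the output; the rest is padded.
        s = min(sx, sy)
        padding = 1.0 - (src_w * s) * (src_h * s) / (dst_w * dst_h)
        return s, s, 1.0, padding
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    # Example: a 16:9 1920x1080 frame resized to a 300x300 NN input.
    for mode in ("crop", "stretch", "letterbox"):
        print(mode, resize_geometry(1920, 1080, 300, 300, mode))
```

For a 16:9 source and a 1:1 target, cropping keeps about 56% of the frame area, letterboxing keeps the full FOV but spends about 44% of the NN input on padding, and stretching keeps the full FOV at the cost of unequal horizontal and vertical scaling.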

Crop

Python

manip.initialConfig.setOutputSize(width, height, dai.ImageManipConfigV2.ResizeMode.CENTER_CROP)

Letterbox

Python

manip.initialConfig.setOutputSize(width, height, dai.ImageManipConfigV2.ResizeMode.LETTERBOX)

Stretch

Python

manip.initialConfig.setOutputSize(width, height, dai.ImageManipConfigV2.ResizeMode.STRETCH)

Displaying detections in High-Res

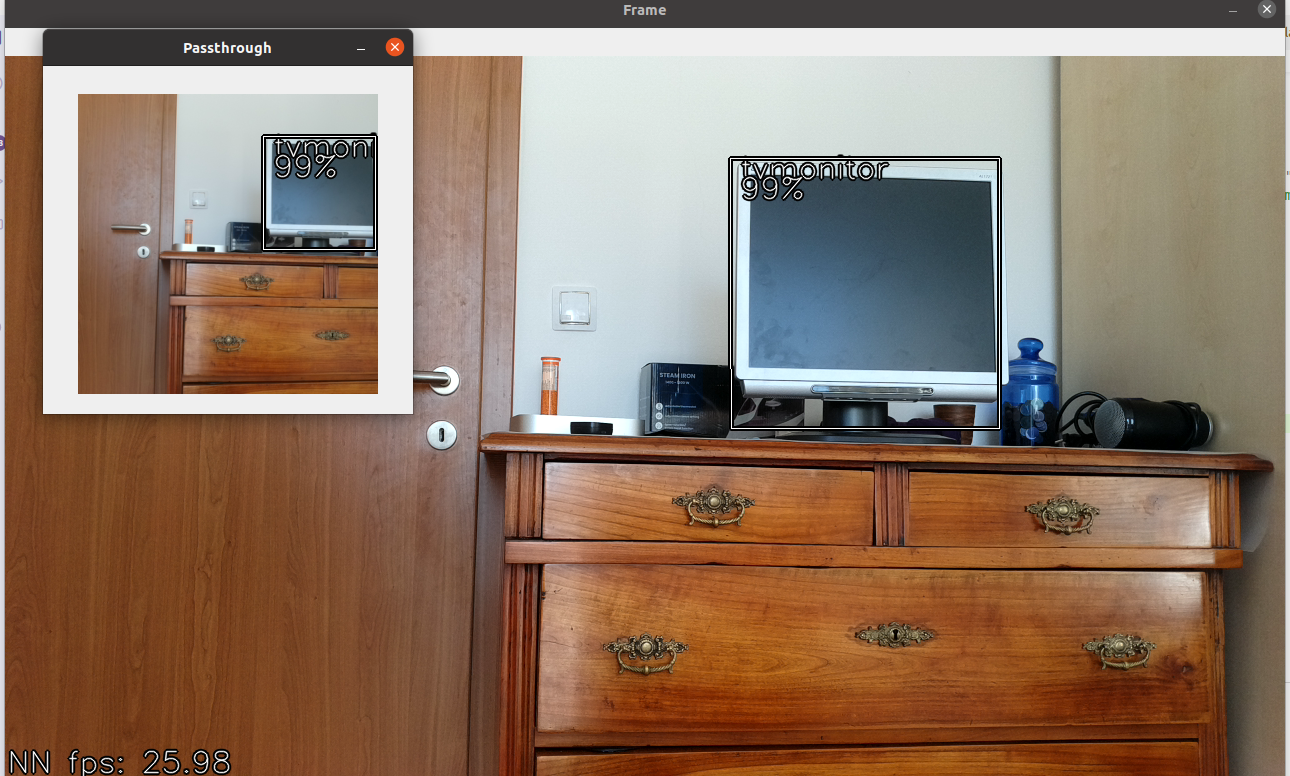

Many NN models take small input frames (e.g. 300x300 or 416x416). Instead of displaying bounding boxes on such small frames, you can stream higher-resolution frames (e.g. video output from ColorCamera) and display the bounding boxes on those. There are several ways to achieve this, and this section covers them.

Passthrough

The simplest approach is to display the passthrough frame of the DetectionNetwork's output, so that bounding boxes are in sync with the frame. Another option is to stream preview frames from ColorCamera and sync them on the host (or not sync at all). The 300x300 frame with detections is shown below. Demo code here.

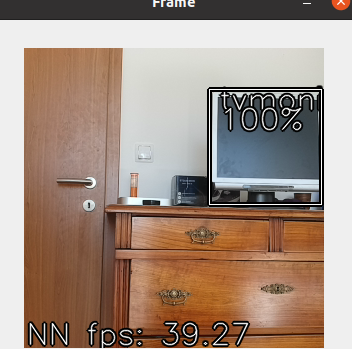

Crop high resolution frame

Another option is to stream high-resolution frames (e.g. video output from ColorCamera) to the host and draw the bounding boxes on them. For the bounding boxes to match the frame, the preview and video outputs must have the same aspect ratio, here 1:1. In the example, we downscale the 4K resolution to 720P, so the maximum square resolution is 720x720, which is exactly the resolution we used (camRgb.setVideoSize(720,720)). We could also use 1080P resolution and stream 1080x1080 frames back to the host. Demo code here.

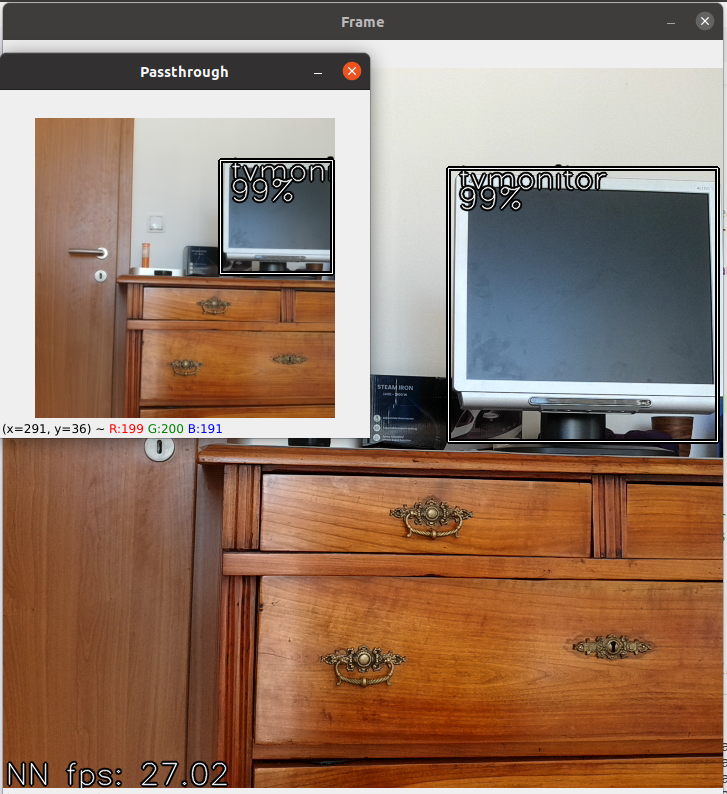
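Because the preview and video frames share the same aspect ratio, drawing on the larger frame only requires scaling the detection coordinates. The sketch below assumes detections arrive as normalized `(xmin, ymin, xmax, ymax)` values in the 0..1 range; the helper name `denormalize_bbox` is hypothetical and not part of the DepthAI API.

```python
def denormalize_bbox(bbox, frame_w, frame_h):
    """Convert a normalized (xmin, ymin, xmax, ymax) box to pixel coordinates.

    Values are clamped to 0..1 first, since a detection can extend slightly
    past the frame edge.
    """
    def clamp(v):
        return min(max(v, 0.0), 1.0)

    xmin, ymin, xmax, ymax = (clamp(v) for v in bbox)
    return (
        int(xmin * frame_w),
        int(ymin * frame_h),
        int(xmax * frame_w),
        int(ymax * frame_h),
    )


if __name__ == "__main__":
    # A detection from the 300x300 NN input, drawn on the 720x720 video frame.
    print(denormalize_bbox((0.25, 0.5, 0.75, 1.0), 720, 720))
```

The same normalized box could be passed with `frame_w=1080, frame_h=1080` if 1080x1080 frames are streamed instead.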

Stretch the frame

Many models expect a 1:1 aspect ratio rather than, e.g., the 16:9 of our camera resolution. With cropping, this means that some of the FOV is lost. Above (Input frame AR mismatch) we showed that changing the aspect ratio preserves the whole FOV of the camera, but "squeezes"/"stretches" the frame, as you can see below. Demo code here.

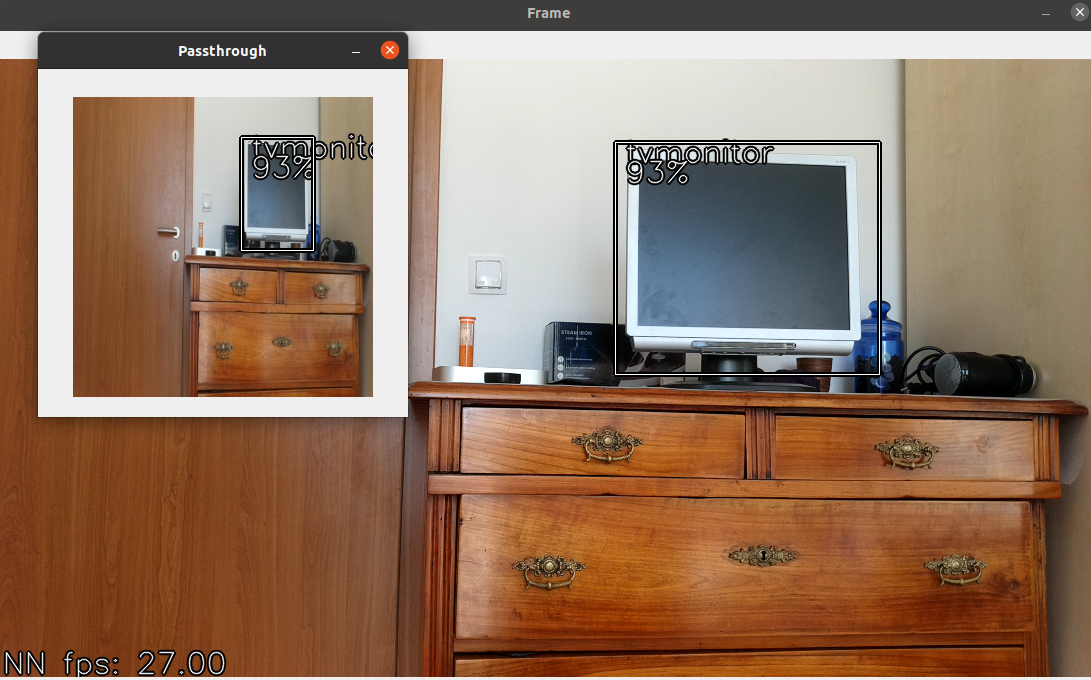

Edit bounding boxes

Alternatively, we could stream whole-FOV video from the device and run inference on 300x300 frames. This would, however, mean that we have to recalculate the bounding boxes to match the different aspect ratio of the video frame. Note that this approach does not give the NN the whole FOV; it only displays the bounding boxes on whole-FOV video frames. Demo code here.
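The recalculation can be sketched as follows, under the assumption that the NN input is a center crop of the full frame and that detections are normalized to the 0..1 range of that crop. The function name `crop_to_full_frame` and its parameters are hypothetical; the actual demo may compute this differently.

```python
def crop_to_full_frame(bbox, frame_w, frame_h, nn_aspect=1.0):
    """Map a normalized bbox from a center-cropped NN input to the full frame.

    bbox is (xmin, ymin, xmax, ymax) in 0..1 relative to the cropped region;
    the result is in 0..1 relative to the full frame_w x frame_h frame.
    nn_aspect is the width/height ratio of the NN input (1.0 for a square).
    """
    frame_aspect = frame_w / frame_h
    if frame_aspect >= nn_aspect:
        # Frame is wider than the NN input: columns were cropped left and right.
        scale_x = (frame_h * nn_aspect) / frame_w
        scale_y = 1.0
    else:
        # Frame is taller than the NN input: rows were cropped top and bottom.
        scale_x = 1.0
        scale_y = (frame_w / nn_aspect) / frame_h
    # The crop is centered, so the cropped-away margin is split evenly.
    off_x = (1.0 - scale_x) / 2
    off_y = (1.0 - scale_y) / 2

    xmin, ymin, xmax, ymax = bbox
    return (
        off_x + xmin * scale_x,
        off_y + ymin * scale_y,
        off_x + xmax * scale_x,
        off_y + ymax * scale_y,
    )


if __name__ == "__main__":
    # A box covering the whole 1:1 crop maps to the middle of a 1920x1080 frame.
    print(crop_to_full_frame((0.0, 0.0, 1.0, 1.0), 1920, 1080))
```

The returned coordinates are still normalized, so they can be multiplied by the video frame's width and height before drawing.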