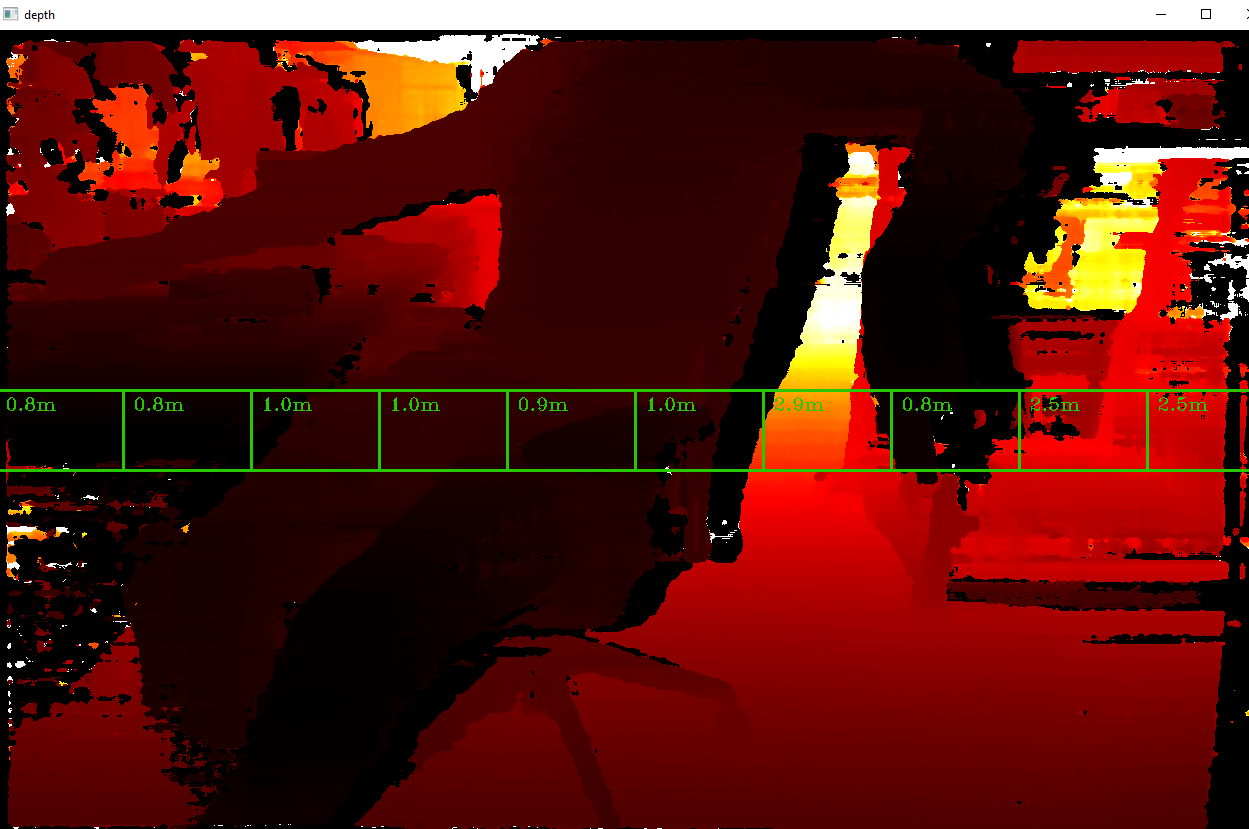

Spatial Calculator Multi-ROI

This example shows how to use multiple ROIs (regions of interest) with a single SpatialLocationCalculator node. Similar logic could be used to build a simple depth line-scanning camera for mobile robots.
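The ROI loop in the example (ten ROIs of width 0.1 in the band y = 0.45 to 0.55) generalizes to any number of equal-width ROIs, and the per-ROI results can be read as a coarse line scan. The following is a minimal sketch in plain Python, with no DepthAI dependency, to illustrate the idea. `make_band_rois` and `to_line_scan` are hypothetical helpers, not part of the DepthAI API, and the axis convention (X to the right, Z forward from the camera, values in millimetres) is an assumption.

```python
import math
from typing import List, Tuple

# Normalized rectangle: (x_min, y_min, x_max, y_max), each in [0, 1]
Rect = Tuple[float, float, float, float]


def make_band_rois(n: int, y_min: float = 0.45, y_max: float = 0.55) -> List[Rect]:
    """Split the frame width into n equal, side-by-side ROIs inside a horizontal band.

    With n=10 this reproduces the example's loop, where ROI i spans
    x in [i*0.1, (i+1)*0.1] and y in [0.45, 0.55].
    """
    width = 1.0 / n
    return [(i * width, y_min, (i + 1) * width, y_max) for i in range(n)]


def to_line_scan(coords_mm: List[Tuple[float, float, float]]) -> List[Tuple[float, float]]:
    """Turn per-ROI spatial coordinates (x, y, z in mm) into (bearing_deg, range_m) pairs.

    Assumption: x grows to the right and z points forward, so the bearing is the
    horizontal angle from the optical axis. The range is the same Euclidean
    distance the example prints. ROIs without valid depth (z <= 0) get
    bearing NaN and range infinity.
    """
    scan = []
    for x, y, z in coords_mm:
        if z <= 0:
            scan.append((float("nan"), math.inf))
            continue
        bearing = math.degrees(math.atan2(x, z))
        rng = math.sqrt(x * x + y * y + z * z) / 1000.0
        scan.append((bearing, rng))
    return scan
```

In the example's main loop, the input to `to_line_scan` could be built from `(coords.x, coords.y, coords.z)` of each `depthData.spatialCoordinates`, giving one bearing/range pair per ROI, ordered left to right.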

Demo

Setup

Please run the install script to download all required dependencies. Note that the script must be run from within a Git checkout, so clone the depthai-python repository first and then run the script:

```shell
git clone https://github.com/luxonis/depthai-python.git
cd depthai-python/examples
python3 install_requirements.py
```

Source code


```python
#!/usr/bin/env python3

import cv2
import depthai as dai
import math
import numpy as np

# Create pipeline
pipeline = dai.Pipeline()

# Define sources and outputs
monoLeft = pipeline.create(dai.node.MonoCamera)
monoRight = pipeline.create(dai.node.MonoCamera)
stereo = pipeline.create(dai.node.StereoDepth)
spatialLocationCalculator = pipeline.create(dai.node.SpatialLocationCalculator)

xoutDepth = pipeline.create(dai.node.XLinkOut)
xoutSpatialData = pipeline.create(dai.node.XLinkOut)
xinSpatialCalcConfig = pipeline.create(dai.node.XLinkIn)

xoutDepth.setStreamName("depth")
xoutSpatialData.setStreamName("spatialData")
xinSpatialCalcConfig.setStreamName("spatialCalcConfig")

# Properties
monoLeft.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)
monoLeft.setCamera("left")
monoRight.setResolution(dai.MonoCameraProperties.SensorResolution.THE_400_P)
monoRight.setCamera("right")

stereo.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.HIGH_DENSITY)
stereo.setLeftRightCheck(True)
stereo.setSubpixel(True)
spatialLocationCalculator.inputConfig.setWaitForMessage(False)

# Create 10 ROIs
for i in range(10):
    config = dai.SpatialLocationCalculatorConfigData()
    config.depthThresholds.lowerThreshold = 200
    config.depthThresholds.upperThreshold = 10000
    config.roi = dai.Rect(dai.Point2f(i*0.1, 0.45), dai.Point2f((i+1)*0.1, 0.55))
    spatialLocationCalculator.initialConfig.addROI(config)

# Linking
monoLeft.out.link(stereo.left)
monoRight.out.link(stereo.right)

spatialLocationCalculator.passthroughDepth.link(xoutDepth.input)
stereo.depth.link(spatialLocationCalculator.inputDepth)

spatialLocationCalculator.out.link(xoutSpatialData.input)
xinSpatialCalcConfig.out.link(spatialLocationCalculator.inputConfig)

# Connect to device and start pipeline
with dai.Device(pipeline) as device:
    device.setIrLaserDotProjectorBrightness(1000)

    # Output queue will be used to get the depth frames from the outputs defined above
    depthQueue = device.getOutputQueue(name="depth", maxSize=4, blocking=False)
    spatialCalcQueue = device.getOutputQueue(name="spatialData", maxSize=4, blocking=False)
    color = (0,200,40)
    fontType = cv2.FONT_HERSHEY_TRIPLEX

    while True:
        inDepth = depthQueue.get() # Blocking call, will wait until a new data has arrived

        depthFrame = inDepth.getFrame() # depthFrame values are in millimeters

        depth_downscaled = depthFrame[::4]
        if np.all(depth_downscaled == 0):
            min_depth = 0 # Set a default minimum depth value when all elements are zero
        else:
            min_depth = np.percentile(depth_downscaled[depth_downscaled != 0], 1)
        max_depth = np.percentile(depth_downscaled, 99)
        depthFrameColor = np.interp(depthFrame, (min_depth, max_depth), (0, 255)).astype(np.uint8)
        depthFrameColor = cv2.applyColorMap(depthFrameColor, cv2.COLORMAP_HOT)

        spatialData = spatialCalcQueue.get().getSpatialLocations()
        for depthData in spatialData:
            roi = depthData.config.roi
            roi = roi.denormalize(width=depthFrameColor.shape[1], height=depthFrameColor.shape[0])

            xmin = int(roi.topLeft().x)
            ymin = int(roi.topLeft().y)
            xmax = int(roi.bottomRight().x)
            ymax = int(roi.bottomRight().y)

            coords = depthData.spatialCoordinates
            distance = math.sqrt(coords.x ** 2 + coords.y ** 2 + coords.z ** 2)

            cv2.rectangle(depthFrameColor, (xmin, ymin), (xmax, ymax), color, thickness=2)
            cv2.putText(depthFrameColor, "{:.1f}m".format(distance/1000), (xmin + 10, ymin + 20), fontType, 0.6, color)
        # Show the frame
        cv2.imshow("depth", depthFrameColor)

        if cv2.waitKey(1) == ord('q'):
            break
```
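The per-frame post-processing in the loop (percentile-based depth colorization and the distance overlay text) does not depend on the device, so it can be isolated and checked on synthetic frames. The following is a minimal NumPy sketch that mirrors those steps; `colorize_scale` and `distance_label` are hypothetical helper names, and the early return for a frame with no usable depth range is an addition of this sketch, not something the example does.

```python
import math

import numpy as np


def colorize_scale(depth_mm: np.ndarray, step: int = 4) -> np.ndarray:
    """Map a depth frame (mm) to 0-255 using robust percentile limits.

    Mirrors the example: sample every `step`-th row, take the 1st percentile of
    the non-zero (valid) samples as the lower bound and the 99th percentile of
    all samples as the upper bound, then rescale linearly.
    """
    sampled = depth_mm[::step]
    valid = sampled[sampled != 0]
    min_depth = 0.0 if valid.size == 0 else float(np.percentile(valid, 1))
    max_depth = float(np.percentile(sampled, 99))
    if max_depth <= min_depth:
        # Added guard (not in the example): no usable range, return a black image
        return np.zeros(depth_mm.shape, dtype=np.uint8)
    # np.interp clamps values outside [min_depth, max_depth] to 0 and 255
    return np.interp(depth_mm, (min_depth, max_depth), (0, 255)).astype(np.uint8)


def distance_label(x_mm: float, y_mm: float, z_mm: float) -> str:
    """Euclidean distance from the camera, formatted in metres like the overlay text."""
    return "{:.1f}m".format(math.sqrt(x_mm ** 2 + y_mm ** 2 + z_mm ** 2) / 1000)
```

Because invalid pixels (value 0) are excluded from the lower percentile, they fall below `min_depth` and end up at the dark end of the color map instead of stretching the scale.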
