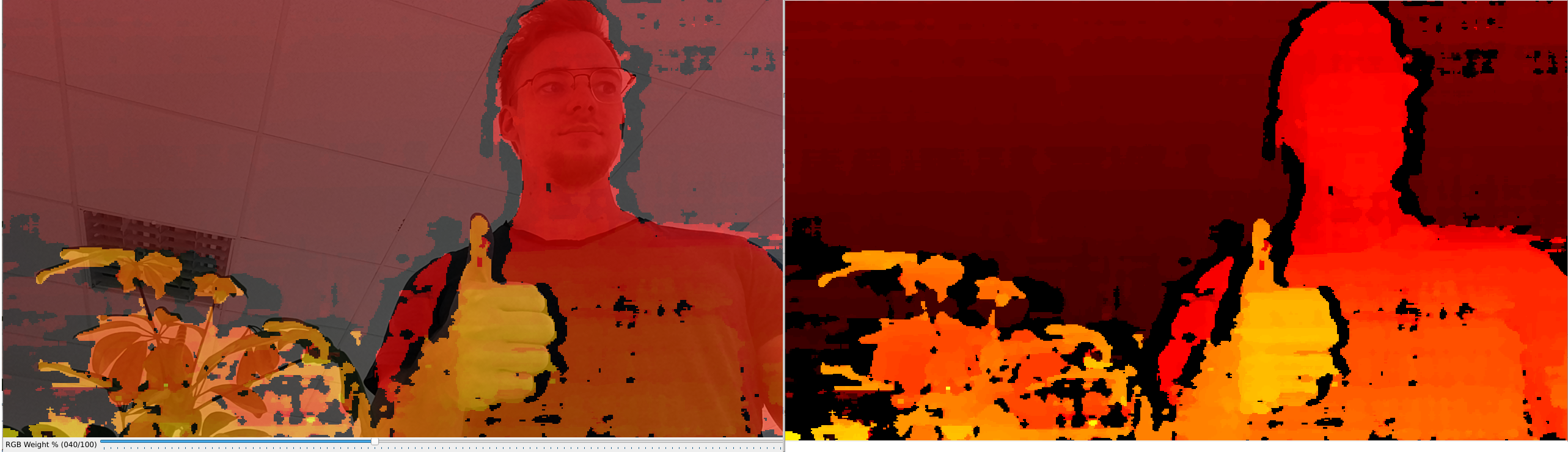

RGB Depth Alignment

You can also perform depth alignment with the ImageAlign node (example), but we recommend using the StereoDepth node's built-in alignment for better performance.

To align depth with a higher-resolution color stream (e.g. 12MP), you need to limit the resolution of the depth map. You can do that with stereo.setOutputSize(w, h). Code example here.
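The sketch below shows one possible way to choose the `w, h` arguments for `stereo.setOutputSize(w, h)`, assuming you want the depth map to keep the color stream's aspect ratio: it scales the color resolution down to a chosen maximum width. The helper name `depth_output_size`, the 4056x3040 color resolution and the 1280-pixel width cap are illustrative assumptions, not limits taken from the DepthAI documentation.

```python
from typing import Tuple


def depth_output_size(rgb_width: int, rgb_height: int, max_width: int) -> Tuple[int, int]:
    """Hypothetical helper: shrink the color resolution to at most max_width
    pixels wide while keeping its aspect ratio."""
    if rgb_width <= max_width:
        # The color stream is already small enough; reuse its resolution.
        return rgb_width, rgb_height
    # Integer arithmetic avoids float rounding surprises; the height is floored.
    height = rgb_height * max_width // rgb_width
    return max_width, height


# Example: an assumed 12MP-class color stream (4056x3040) capped at 1280 px wide.
w, h = depth_output_size(4056, 3040, 1280)
print(w, h)  # 1280 959
# In the pipeline the result would then be passed on, e.g.:
# stereo.setOutputSize(w, h)
```

Using a pure helper keeps the size calculation separate from pipeline setup, so it can be checked without a device attached.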

Setup

Please run the install script to download all required dependencies. Note that this script must be run from within a git checkout, so you have to clone the depthai-python repository first and then run the script:

```shell
git clone https://github.com/luxonis/depthai-python.git
cd depthai-python/examples
python3 install_requirements.py
```

Source code


```python
#!/usr/bin/env python3

import cv2
import numpy as np
import depthai as dai
import argparse

# Weights to use when blending depth/rgb image (should sum to 1.0)
rgbWeight = 0.4
depthWeight = 0.6

parser = argparse.ArgumentParser()
parser.add_argument('-alpha', type=float, default=None, help="Alpha scaling parameter to increase FOV. [0,1] valid interval.")
args = parser.parse_args()
alpha = args.alpha

def updateBlendWeights(percent_rgb):
    """
    Update the rgb and depth weights used to blend depth/rgb image

    @param[in] percent_rgb The rgb weight expressed as a percentage (0..100)
    """
    global depthWeight
    global rgbWeight
    rgbWeight = float(percent_rgb)/100.0
    depthWeight = 1.0 - rgbWeight


fps = 30
# The disparity is computed at this resolution, then upscaled to RGB resolution
monoResolution = dai.MonoCameraProperties.SensorResolution.THE_720_P

# Create pipeline
pipeline = dai.Pipeline()
device = dai.Device()
queueNames = []

# Define sources and outputs
camRgb = pipeline.create(dai.node.Camera)
left = pipeline.create(dai.node.MonoCamera)
right = pipeline.create(dai.node.MonoCamera)
stereo = pipeline.create(dai.node.StereoDepth)

rgbOut = pipeline.create(dai.node.XLinkOut)
disparityOut = pipeline.create(dai.node.XLinkOut)

rgbOut.setStreamName("rgb")
queueNames.append("rgb")
disparityOut.setStreamName("disp")
queueNames.append("disp")

# Properties
rgbCamSocket = dai.CameraBoardSocket.CAM_A

camRgb.setBoardSocket(rgbCamSocket)
camRgb.setSize(1280, 720)
camRgb.setFps(fps)

# For now, RGB needs fixed focus to properly align with depth.
# Use the lens position stored during calibration (if the device has one).
calibData = device.readCalibration2()
lensPosition = calibData.getLensPosition(rgbCamSocket)
if lensPosition:
    camRgb.initialControl.setManualFocus(lensPosition)

left.setResolution(monoResolution)
left.setCamera("left")
left.setFps(fps)
right.setResolution(monoResolution)
right.setCamera("right")
right.setFps(fps)

stereo.setDefaultProfilePreset(dai.node.StereoDepth.PresetMode.HIGH_DENSITY)
# LR-check is required for depth alignment
stereo.setLeftRightCheck(True)
stereo.setDepthAlign(rgbCamSocket)

# Linking
camRgb.video.link(rgbOut.input)
left.out.link(stereo.left)
right.out.link(stereo.right)
stereo.disparity.link(disparityOut.input)

camRgb.setMeshSource(dai.CameraProperties.WarpMeshSource.CALIBRATION)
if alpha is not None:
    camRgb.setCalibrationAlpha(alpha)
    stereo.setAlphaScaling(alpha)

# Connect to device and start pipeline
with device:
    device.startPipeline(pipeline)

    frameRgb = None
    frameDisp = None

    # Configure windows; trackbar adjusts blending ratio of rgb/depth
    rgbWindowName = "rgb"
    depthWindowName = "depth"
    blendedWindowName = "rgb-depth"
    cv2.namedWindow(rgbWindowName)
    cv2.namedWindow(depthWindowName)
    cv2.namedWindow(blendedWindowName)
    cv2.createTrackbar('RGB Weight %', blendedWindowName, int(rgbWeight*100), 100, updateBlendWeights)

    while True:
        latestPacket = {}
        latestPacket["rgb"] = None
        latestPacket["disp"] = None

        # Drain each queue that has data and keep only its newest packet
        queueEvents = device.getQueueEvents(("rgb", "disp"))
        for queueName in queueEvents:
            packets = device.getOutputQueue(queueName).tryGetAll()
            if len(packets) > 0:
                latestPacket[queueName] = packets[-1]

        if latestPacket["rgb"] is not None:
            frameRgb = latestPacket["rgb"].getCvFrame()
            cv2.imshow(rgbWindowName, frameRgb)

        if latestPacket["disp"] is not None:
            frameDisp = latestPacket["disp"].getFrame()
            maxDisparity = stereo.initialConfig.getMaxDisparity()
            # Optional, extend range 0..95 -> 0..255, for a better visualisation
            if 1: frameDisp = (frameDisp * 255. / maxDisparity).astype(np.uint8)
            # Optional, apply false colorization
            if 1: frameDisp = cv2.applyColorMap(frameDisp, cv2.COLORMAP_HOT)
            frameDisp = np.ascontiguousarray(frameDisp)
            cv2.imshow(depthWindowName, frameDisp)

        # Blend when both received
        if frameRgb is not None and frameDisp is not None:
            # Need to have both frames in BGR format before blending
            if len(frameDisp.shape) < 3:
                frameDisp = cv2.cvtColor(frameDisp, cv2.COLOR_GRAY2BGR)
            blended = cv2.addWeighted(frameRgb, rgbWeight, frameDisp, depthWeight, 0)
            cv2.imshow(blendedWindowName, blended)
            frameRgb = None
            frameDisp = None

        if cv2.waitKey(1) == ord('q'):
            break
```
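To make the display path of the example easier to follow without a device attached, here is a NumPy-only sketch of the two image operations it performs: stretching the raw disparity to 0..255 and blending it with the color frame using weights that sum to 1.0. The function names `normalize_disparity` and `blend_frames` are hypothetical, the false-color step (`cv2.applyColorMap`) is skipped, and `blend_frames` only approximates `cv2.addWeighted`, whose exact rounding happens inside OpenCV.

```python
import numpy as np


def normalize_disparity(disp: np.ndarray, max_disparity: float) -> np.ndarray:
    """Stretch raw disparity values (0..max_disparity) to the 0..255 range."""
    # Same expression as the example; astype() truncates the fractional part.
    return (disp * 255.0 / max_disparity).astype(np.uint8)


def blend_frames(rgb: np.ndarray, depth_bgr: np.ndarray, rgb_weight: float) -> np.ndarray:
    """Hypothetical stand-in for cv2.addWeighted(rgb, w, depth, 1 - w, 0)."""
    # The two weights always sum to 1.0, as updateBlendWeights() ensures.
    depth_weight = 1.0 - rgb_weight
    out = rgb.astype(np.float32) * rgb_weight + depth_bgr.astype(np.float32) * depth_weight
    # Round to nearest and saturate to uint8, approximating OpenCV's behaviour.
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


# A 1x3 "disparity frame" using the example's 0..95 disparity range.
disp = np.array([[0, 47, 95]], dtype=np.uint8)
norm = normalize_disparity(disp, 95)

# Grayscale -> 3 channels, mirroring the example's COLOR_GRAY2BGR step.
depth_bgr = np.repeat(norm[:, :, np.newaxis], 3, axis=2)
rgb = np.full((1, 3, 3), 200, dtype=np.uint8)  # a flat gray "color frame"

blended = blend_frames(rgb, depth_bgr, rgb_weight=0.4)
print(norm.tolist())
print(blended[0, :, 0].tolist())
```

Because both frames must have the same size and channel count before blending, the sketch expands the single-channel disparity to three channels first, just as the example does when colorization is turned off.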
