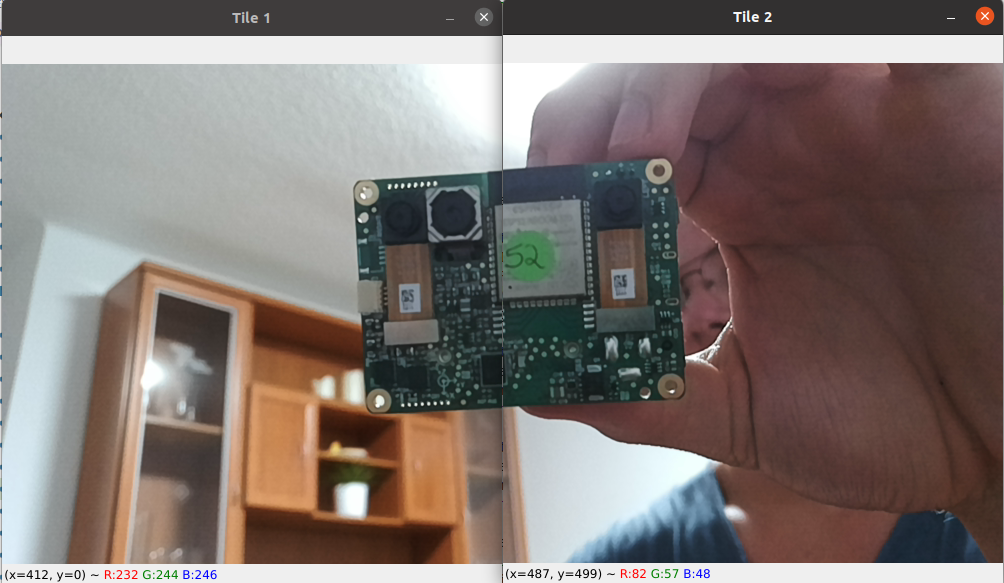

ImageManip Tiling

This example uses two ImageManip nodes to split a 1000x500 preview frame into two 500x500 frames.

Demo
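The split can be checked with plain arithmetic before anything runs on a device. The following is a minimal sketch, assuming the crop rectangle is given as normalized (xmin, ymin, xmax, ymax) values, as the `setCropRect` calls in the source code suggest. `tile_rects`, `tile_size` and `frame_bytes` are hypothetical helpers written for illustration and are not part of depthai.

```python
def tile_rects(cols, rows=1):
    """Return normalized (xmin, ymin, xmax, ymax) crop rectangles, row by row."""
    rects = []
    for r in range(rows):
        for c in range(cols):
            rects.append((c / cols, r / rows, (c + 1) / cols, (r + 1) / rows))
    return rects


def tile_size(rect, width, height):
    """Convert a normalized rectangle to a (width, height) pair in pixels."""
    xmin, ymin, xmax, ymax = rect
    return round((xmax - xmin) * width), round((ymax - ymin) * height)


def frame_bytes(width, height, channels=3):
    """Bytes for a frame with one byte per channel, mirroring the `* 3` in the example."""
    return width * height * channels


PREVIEW_W, PREVIEW_H = 1000, 500  # preview size used in this example

rects = tile_rects(cols=2, rows=1)
sizes = [tile_size(r, PREVIEW_W, PREVIEW_H) for r in rects]

print(rects)   # [(0.0, 0.0, 0.5, 1.0), (0.5, 0.0, 1.0, 1.0)]
print(sizes)   # [(500, 500), (500, 500)]
print(frame_bytes(PREVIEW_W, PREVIEW_H))  # 1500000
```

The two rectangles match the values passed to `setCropRect` for the left and right tile. Under the same one-byte-per-channel assumption, a single 500x500 tile needs 750000 bytes, so the full-preview size used for `setMaxOutputFrameSize` in the example acts as a safe upper bound.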

Setup

Command Line

    git clone https://github.com/luxonis/depthai-python.git
    cd depthai-python/examples
    python3 install_requirements.py

Source code


    #!/usr/bin/env python3

    import cv2
    import depthai as dai

    # Create pipeline
    pipeline = dai.Pipeline()

    camRgb = pipeline.create(dai.node.ColorCamera)
    camRgb.setPreviewSize(1000, 500)
    camRgb.setInterleaved(False)
    maxFrameSize = camRgb.getPreviewHeight() * camRgb.getPreviewWidth() * 3

    # In this example we use 2 imageManips for splitting the original 1000x500
    # preview frame into 2 500x500 frames
    manip1 = pipeline.create(dai.node.ImageManip)
    manip1.initialConfig.setCropRect(0, 0, 0.5, 1)
    manip1.setMaxOutputFrameSize(maxFrameSize)
    camRgb.preview.link(manip1.inputImage)

    manip2 = pipeline.create(dai.node.ImageManip)
    manip2.initialConfig.setCropRect(0.5, 0, 1, 1)
    manip2.setMaxOutputFrameSize(maxFrameSize)
    camRgb.preview.link(manip2.inputImage)

    xout1 = pipeline.create(dai.node.XLinkOut)
    xout1.setStreamName('out1')
    manip1.out.link(xout1.input)

    xout2 = pipeline.create(dai.node.XLinkOut)
    xout2.setStreamName('out2')
    manip2.out.link(xout2.input)

    # Connect to device and start pipeline
    with dai.Device(pipeline) as device:
        # Output queues will be used to get the rgb frames from the outputs defined above
        q1 = device.getOutputQueue(name="out1", maxSize=4, blocking=False)
        q2 = device.getOutputQueue(name="out2", maxSize=4, blocking=False)

        while True:
            if q1.has():
                cv2.imshow("Tile 1", q1.get().getCvFrame())

            if q2.has():
                cv2.imshow("Tile 2", q2.get().getCvFrame())

            if cv2.waitKey(1) == ord('q'):
                break

Pipeline
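The links created in the source code above form two parallel branches that start at the camera preview. The following is a minimal sketch in plain Python (no device or depthai import needed) that records those links as an edge list and traces each branch. The node labels mirror the variable names in the example; `node_of` and `trace` are hypothetical helpers written for illustration.

```python
# Links copied from the source code as (source port, destination port) pairs.
LINKS = [
    ("camRgb.preview", "manip1.inputImage"),
    ("camRgb.preview", "manip2.inputImage"),
    ("manip1.out", "xout1.input"),
    ("manip2.out", "xout2.input"),
]

# Stream names set with setStreamName() in the source code.
STREAMS = {"xout1": "out1", "xout2": "out2"}


def node_of(port):
    """Strip the port name: 'manip1.out' -> 'manip1'."""
    return port.split(".", 1)[0]


def trace(start, links):
    """List every node path from `start` to a node that has no outgoing link."""
    children = [node_of(dst) for src, dst in links if node_of(src) == start]
    if not children:
        return [[start]]
    return [[start] + path for child in children for path in trace(child, links)]


branches = trace("camRgb", LINKS)
for path in branches:
    print(" -> ".join(path), "-> stream", STREAMS[path[-1]])
# camRgb -> manip1 -> xout1 -> stream out1
# camRgb -> manip2 -> xout2 -> stream out2
```

Each branch ends in its own XLinkOut stream, which is why the host code reads the two tiles from separate output queues.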
